
Welcome

Another sprint - done and done! This will be (as usual) an exhaustive review of our last (third) sprint. We have a LOT to cover, so let’s do the review!

Sprint Recap

Let’s set the stage for the review - by reminding ourselves of the goal of this sprint:

Deploy Python components!

So how did we do?

Tasks

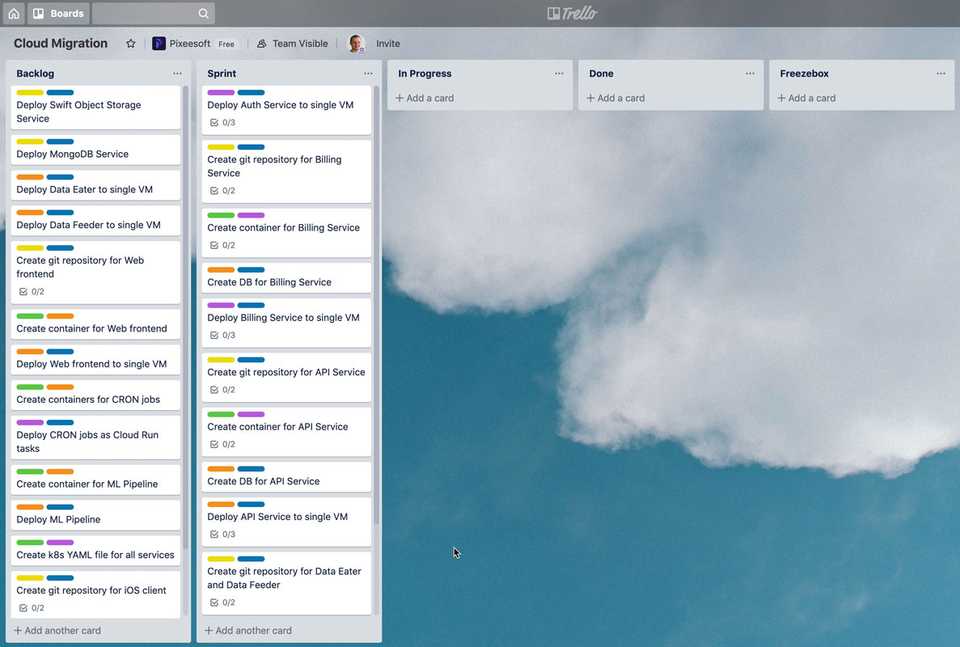

Let’s see what the scrum board looked like at the end of the planning - issues neatly piled, ready to be finished.

The total amount of work was 7.2 man-days - so for a 7-day sprint, a slightly optimistic forecast.

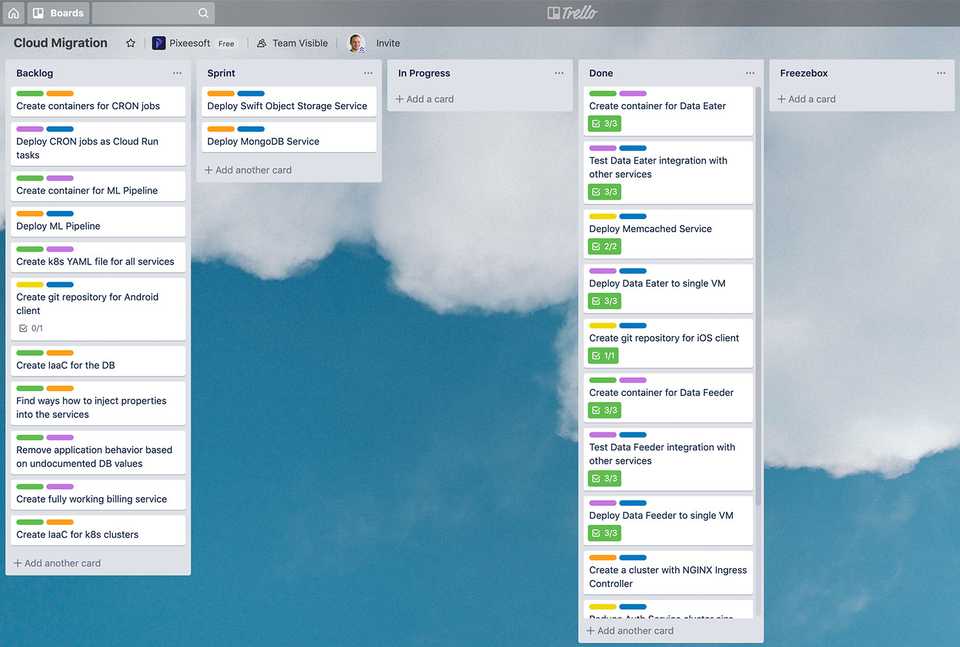

And this is the board after the sprint.

Some tasks were left behind, BUT! There are no tasks in the freezebox. That means we didn’t need to pivot and that all planned tasks were valid.

However - as usual, there were some unexpected tasks:

1. Reduce the size of the GKE clusters for the API services

The first one (the auth service) took slightly longer, because I had to find out how small we could go and debug a few things here and there. But the second one took a fraction of the time.

2. Create a cluster using NGINX Ingress rather than the provided Google L7 Load Balancer.

That came with some issues. Notably, the managed certificate no longer works with NGINX Ingress, because the L7 LB uses a global IP, whereas NGINX uses a regional one. That means the Let’s Encrypt provisioning has to be done from inside the cluster, so I had to deploy cert-manager from Jetstack. I’ve already done that before and it’s fairly easy. It just takes some time to set up.

3. Create a git repository for iOS client

As I was using the iOS client to test the websockets, I thought - well, I can just create the git repo now. So I did. And I also spent some time debugging the new API deployment - some things have changed, but that was a part of the “test data feeder integration” issue.

4. Deploy Memcached service

Last but not least, I had to deploy a Memcached service - at first I thought it would take half of my day, but it was actually just a few clicks away. So that’s done.

In total that’s approximately 7.8 man-days in 7 days of work. Some tasks are hard to tell apart - like deploying in a VM and testing the deployment. Their effort overlaps, so if I subtract some of it, we’re on track, heading in the right direction at the right speed.
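To put those numbers side by side, here is a minimal sketch of the arithmetic in Python. The function and constant names are made up for illustration; only the figures (7.2 forecast, roughly 7.8 logged, 7 days) come from the review above.

```python
def load_ratio(effort_man_days: float, sprint_days: float) -> float:
    """Return effort divided by available days; above 1.0 means overbooked."""
    if sprint_days <= 0:
        raise ValueError("sprint_days must be positive")
    return effort_man_days / sprint_days


# Figures taken from this sprint review (names are hypothetical).
SPRINT_DAYS = 7
PLANNED_MAN_DAYS = 7.2
LOGGED_MAN_DAYS = 7.8  # approximate, some tasks overlap

planned_ratio = round(load_ratio(PLANNED_MAN_DAYS, SPRINT_DAYS), 2)
logged_ratio = round(load_ratio(LOGGED_MAN_DAYS, SPRINT_DAYS), 2)

print(f"planned load: {planned_ratio}, logged load: {logged_ratio}")
```

Both ratios sit a little above 1.0, which is what a slightly optimistic forecast plus a few unexpected tasks looks like. Since the logged figure double counts some overlapping work, the real load is probably somewhat lower.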

Technical Debt

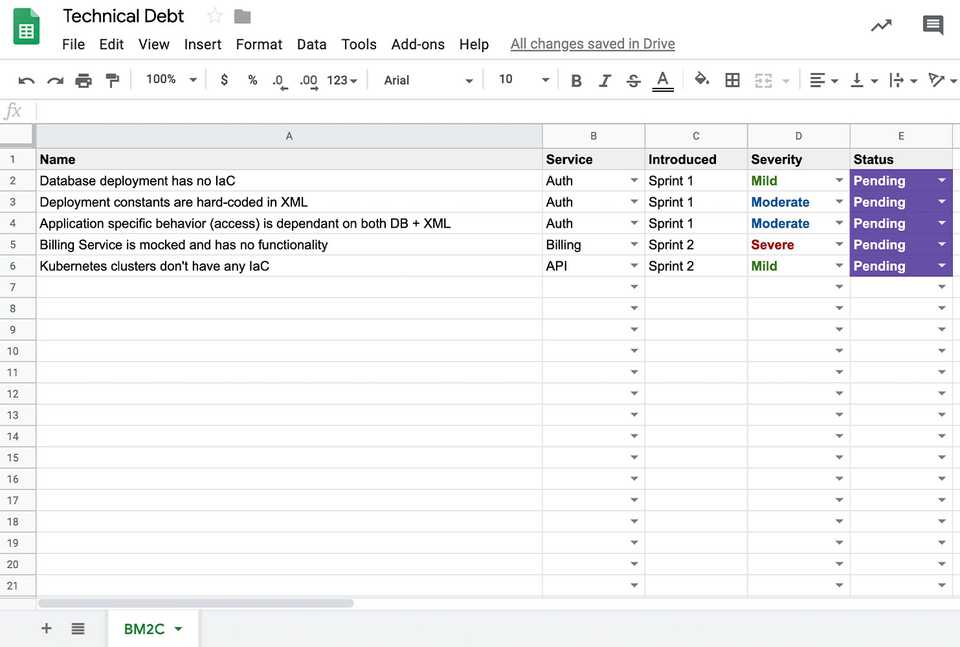

So what about the technical debt? Well, I haven’t had the time to address anything that was already in the list and I introduced some new issues.

I can see a pattern emerging. But that’s also part of the developer’s life. You deliver something new so that you can come back to it and rewrite it.

- Memcached service was deployed by clicking in the GCP portal.

- Both clusters, for the data eater and feeder respectively, use the NGINX ingress controller, which doesn’t come natively with Kubernetes. Some Helm/Tiller commands were run and there were some special deployments for cert-manager, all done manually. This should get addressed at some point by creating Terraform recipes, so that the whole infrastructure can be set up automatically.

- Just like the API services have their respective constants defined in the code, the Python service has them as well. Some IP addresses and ports are hardwired in the codebase. I should probably write that into the documentation so that it doesn’t get lost.
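Documenting the hardwired values is the first step. One possible follow-up, sketched here only as an idea and not as what the Python service does today, is to read the addresses and ports from environment variables with defaults. Everything in this snippet is hypothetical: the `FEEDER` prefix, the variable names, the default values and the `ServiceConfig` class.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class ServiceConfig:
    """Connection settings that would otherwise be constants in the code."""
    host: str
    port: int


def load_config(env: Optional[Mapping[str, str]] = None,
                prefix: str = "FEEDER") -> ServiceConfig:
    """Build the config from <prefix>_HOST and <prefix>_PORT, with defaults."""
    env = os.environ if env is None else env
    host = env.get(f"{prefix}_HOST", "127.0.0.1")  # hypothetical default
    port = int(env.get(f"{prefix}_PORT", "8080"))  # hypothetical default
    if not 0 < port < 65536:
        raise ValueError(f"{prefix}_PORT out of range: {port}")
    return ServiceConfig(host=host, port=port)


# Passing a plain dict makes the function easy to try without touching os.environ.
config = load_config({"FEEDER_HOST": "10.0.0.5", "FEEDER_PORT": "9000"})
print(config)
```

The upside of this shape is that the same image could run in both clusters with different settings, and the defaults double as documentation of what used to be hardwired.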

Done

So with regards to the goal of the sprint - did we achieve what we set out to do? I would say YES - the moment I managed to make the services talk to each other and I could see live data on the website and the mobile app, I literally lifted my arms above my head as though I scored a goal. It was really a magical moment when all pieces just fell into place. And I couldn’t be happier, because everything else now is just a technicality. The core infrastructure is working.

But the battle is far from over. We need to deploy persistence and intelligence on top of the data and we haven’t even scratched the surface with budgets and billing. What if it’s too expensive to run in the cloud after all? Who knows…

K.A.L.M.

Let’s start a brand new section of the review - KALM:

Something to …

- Keep

- Add

- do Less of

- do More of

Let’s start with Keep - I definitely want to keep the livestreams. It’s a great compromise between delivering value and not spending the entire day on production.

Add goes hand in hand with the keep. I haven’t been really structured about the beginning of our daily scrum. Sometimes it’s at 9:30, sometimes at 11. And goodness me, once it was at 3PM. I think I’d like to add a schedule - let’s say - every day at 10:15 in the morning, or something like that - put it in the calendar, schedule the stream ahead in YouTube studio and just go live at the given time.

Less - Instagram. I downloaded the video after each stream, created a vertical version of it and published it on IGTV. Despite tagging it properly, posting about it and reposting, it just never got any attention. I think perhaps IGTV is not the most suitable platform, at least for now. I might just avoid it in the upcoming sprint altogether.

More - talk to people. Sometimes I get stuck and try to solve everything in a stubborn fashion, when I actually just need to talk to somebody - not necessarily to ask them for the solution, but to tell them about the problem, like rubber ducking. When you try to tell somebody else what your problem is, in 99% of cases you’ll eventually be able to figure it out yourself. Really. So that’s what I want to do more of - talking to people.

Conclusion

So there it is, our sprint review. We went through the tasks, technical debt and changes we want to address in the next sprint.

But let’s hear from you now - when was the last time you asked your engineers what they want to do less and more of? Do you do this often or not often enough? Let me know on any social platform - Facebook, Twitter or Instagram!